Personality around the world

The Big Two in English, Spanish, French, Russian, Turkish, and Persian

Okay, let’s take a little reprieve from the sapience stuff. I actually had a bunch of psychometrics queued up before being pulled in by the clarion call of consciousness. It’s just so hard to look away.

I started this blog to explore the Lexical Hypothesis from a machine learning perspective. Personality models define the most-gossiped-about traits in a language, and we can measure that much better in the age of GPT. Personality models derived from either word vectors or traditional surveys come back to the same few traits, especially the Big Two: social self-regulation and dynamism. For a refresher on this, check out The Big Five are Word Vectors and The Primary Factor of Personality.

The Big Five has been found in many languages independently, but the comparison between languages is always qualitative. Researchers administer a survey of personality adjectives in Turkish or German, factorize it, and kind of eyeball the factors to see if they are the same. This data can’t be used to say “Extraversion is shifted 15 degrees away from Conscientiousness in German compared to English.” To be so precise, both languages would have to share some basis.
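To make the "15 degrees" idea concrete, here is a minimal sketch of what a shared basis buys you: once two factors are vectors in the same space, the angle between them is just arithmetic. The function name and the toy vectors are hypothetical, not taken from any real dataset.

```python
import math

def angle_degrees(u, v):
    """Angle between two factor vectors expressed in the same basis."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cosine))

# Hypothetical factor directions, made up purely for illustration.
extraversion_en, conscientiousness_en = [1.0, 0.0], [0.0, 1.0]
extraversion_de, conscientiousness_de = [1.0, 0.2], [0.0, 1.0]

# How far the German angle departs from the English one, in degrees.
shift = (angle_degrees(extraversion_de, conscientiousness_de)
         - angle_degrees(extraversion_en, conscientiousness_en))
```

Without a common basis the two angles live in different coordinate systems and the subtraction on the last line is meaningless, which is the whole problem with eyeballing.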

If you administer questions in multiple languages you can relate them by 1) finding a bilingual cohort who can answer in both languages or 2) assuming the translations of words are 1:1 (e.g., fun is perfectly equivalent to divertido in Spanish). In the former case, there is a strong selection effect: what if bilingual people tend to be better educated? The latter is simply not true. In fact, the reason to factor languages together is to understand how personality structure may diverge between them. Assuming the words are the same defeats the purpose.

My research showed that you can extract personality structure from language models in English. A natural question is how that changes when you add other languages. With models trained on dozens of languages, this becomes rather painless to explore. You can map any number of languages to the same basis.

The Big Two, once again

I used XLM-RoBERTa to assign similarity between personality adjectives. Strangely, this model is a result of the genocide in Myanmar. Meta is in the unenviable position of having to remove content in places it understands very poorly. Technically, this is what’s called a transfer learning problem. They would like to train a hate speech classifier in English (or another well-sourced language), and then have that apply to other languages. In the dark ages of language modeling (2018) this worked very poorly. Colloquial speech in Burmese for “let’s round up the gays and kill them” looked to their classifiers like “there should be fewer rainbows”. This, of course, slid past their content moderation. The NYT explained the consequence: A Genocide Incited on Facebook, With Posts From Myanmar’s Military

Meta’s response was to build a language model that could better map any language (well, 100 languages) to word vectors in the same shared space. That way a hate speech classifier trained in English can better extend to other languages. (Less Burmese is needed to fine tune it.) Using this model, I embedded personality words in four languages: English, Spanish, French, and Turkish. Below are the first two factors:

These serve to separate the different languages. The first factor distinguishes Turkish from the Indo-European languages. On the second factor, the Romance languages are adjacent (though also close to Turkish).

This makes sense. The model is trained to predict masked-out words in a sentence, so it will naturally encode language-specific information. If someone is talking in Spanish they do not often switch to Turkish. The hope is that there are also directions in vector-space that correspond to personality information.
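One way to tell a language direction from a personality direction is to ask how much of a factor's variance is explained by language membership alone. The sketch below computes that ratio (eta squared) for one factor. It is an illustrative check I am assuming here, not necessarily the procedure used for the plots, and the function name is hypothetical.

```python
from collections import defaultdict

def language_variance_ratio(scores, languages):
    """Share of a factor's variance explained by language labels (eta squared).

    scores    -- one factor score per word
    languages -- the language label of each word, in the same order
    Returns a value near 1.0 for a factor that only separates languages,
    and near 0.0 for a factor shared across languages.
    """
    grand_mean = sum(scores) / len(scores)
    total = sum((s - grand_mean) ** 2 for s in scores)
    if total == 0:
        return 0.0  # a constant factor separates nothing

    groups = defaultdict(list)
    for score, lang in zip(scores, languages):
        groups[lang].append(score)

    # Between-language sum of squares: group size times squared centroid offset.
    between = sum(
        len(group) * (sum(group) / len(group) - grand_mean) ** 2
        for group in groups.values()
    )
    return between / total
```

A factor where every Turkish word scores high and every English word scores low would return something close to 1. A factor where generous words score high in every language would return something close to 0.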

If languages are fairly independent, you need at least 3 dimensions to separate 4 languages in their own non-overlapping groups. Let’s check out the next principal components.

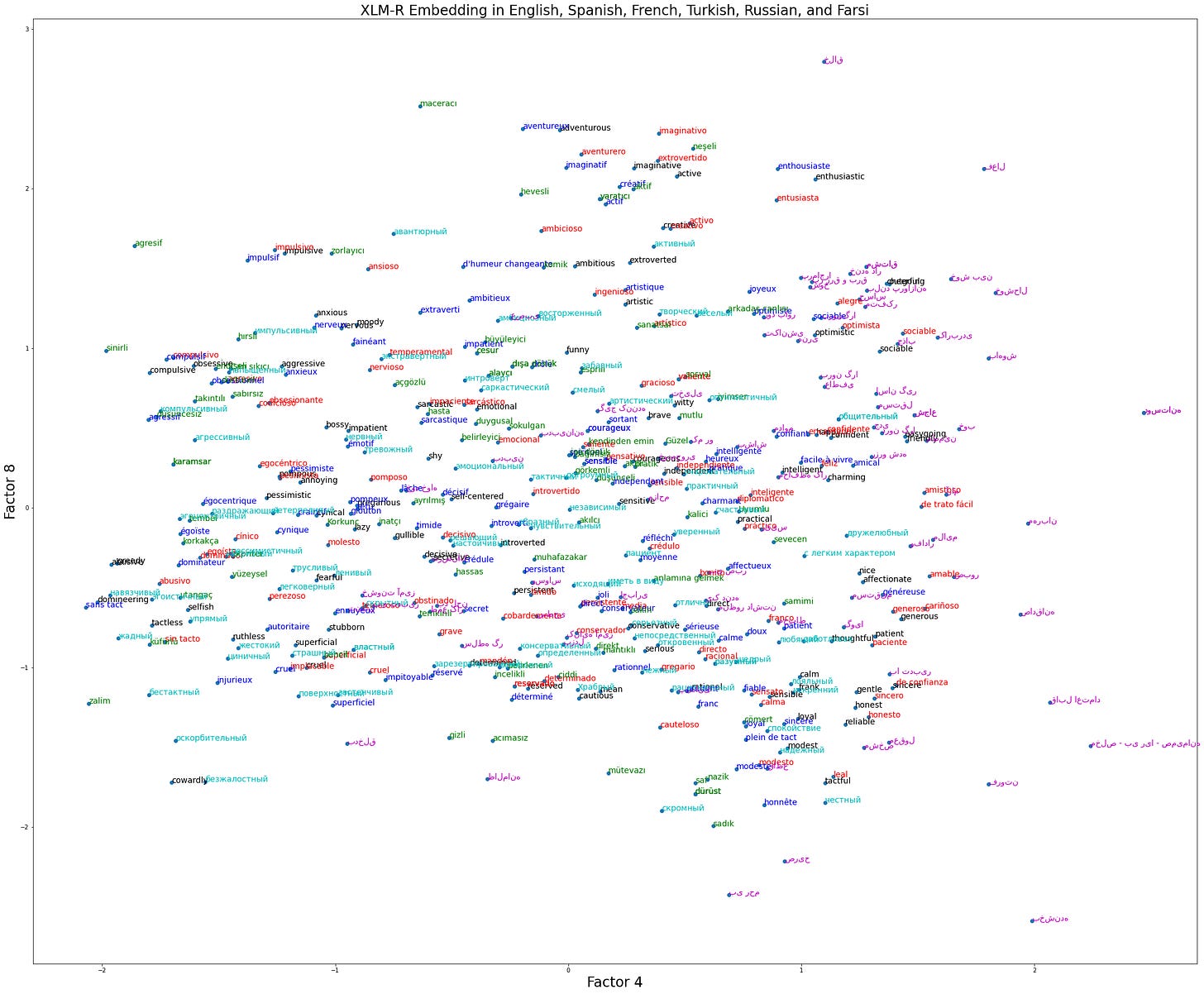
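The claim that four languages call for three separating dimensions can be sketched with a short rank argument. The notation is my own assumption: x_{l,i} is the embedding of word i in language l, there are k languages, and language l contributes n_l words.

```latex
% Language centroids and the grand mean (assumed notation, not from the original analysis)
\mu_l = \frac{1}{n_l}\sum_{i=1}^{n_l} x_{l,i}, \qquad
\bar{\mu} = \frac{1}{N}\sum_{l=1}^{k} n_l\,\mu_l, \qquad
N = \sum_{l=1}^{k} n_l

% Between-language scatter: the part of the variance due to language membership
S_B = \sum_{l=1}^{k} n_l\,(\mu_l - \bar{\mu})(\mu_l - \bar{\mu})^{\top}

% The centered centroids satisfy one linear constraint, so they span at most k-1 directions
\sum_{l=1}^{k} n_l\,(\mu_l - \bar{\mu}) = 0
\;\Longrightarrow\;
\operatorname{rank}(S_B) \le k - 1
```

With k = 4 the bound is 3, and it is reached when the four centroids do not lie in a common plane. That is the "fairly independent" case, where language separation can use up three factors before anything shared shows up.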

Factor 4 is the first factor that wasn’t learned to separate the languages, and it’s the General Factor of Personality! In English: domineering, ruthless, compulsive and selfish vs generous, gentle, and thoughtful. I have argued that this factor is best understood as the tendency to live the Golden Rule. The Eve Theory of Consciousness was actually a result of wondering what this would select for in our evolutionary history. Factor 5 is also about personality, plotting them together:

We get the Big Two! Factor Five (or two, of the personality factors) is Dynamism: adventurous, imaginative, and enthusiastic vs cautious, reserved, and cowardly. It’s amazing that this pops out so regularly. There are 2,500 citations on the Big Two paper, and still researchers don’t realize that they are simply the first two unrotated factors of general personality. The common belief that they somehow exist in a hierarchical relationship with the Big Five comes from researchers abandoning dealing directly with language shortly after making the Big Five inventories. Since then, any attempt to understand basic or general personality must be done in reference to the Big Five. But words came first, and language models make it easy to analyze language on that fundamental level now.

We have to go deeper

Adding Russian and Farsi yields the same factors:

By my lazy-ass engineer standards this is fairly labor intensive because it requires finding a good prompt for each language. I worked with Google Translate and native speakers to get this right, and you can see the Farsi distribution is still off on Factor 4. My guess is that my method of ignoring any factor that is not shared is too janky for this many languages. Factor 4 is probably used as the GFP, and also to separate Farsi (just a bit). There is nothing keeping these factors pure; we are really lucky that the distribution is as well-behaved as it is. Doing some preprocessing (like mean-centering each language cluster, i.e. subtracting each language's average vector from its words) may solve this.
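The preprocessing idea above can be sketched in a few lines. This is a minimal version assuming the embeddings are plain lists of floats with a parallel list of language labels. I have not run it on the real data, so whether it fixes the Farsi offset is an open question.

```python
from collections import defaultdict

def center_by_language(vectors, languages):
    """Subtract each language's mean vector from the words of that language."""
    sums = {}
    counts = defaultdict(int)
    for vec, lang in zip(vectors, languages):
        if lang not in sums:
            sums[lang] = [0.0] * len(vec)
        for i, x in enumerate(vec):
            sums[lang][i] += x
        counts[lang] += 1

    # Per-language centroid.
    means = {
        lang: [total / counts[lang] for total in dims]
        for lang, dims in sums.items()
    }

    # Every language cluster now sits on the origin.
    return [
        [x - m for x, m in zip(vec, means[lang])]
        for vec, lang in zip(vectors, languages)
    ]
```

After centering, every language has the same centroid, so no principal component can be spent on telling the centroids apart. Differences in the shape or spread of each language's cluster would survive, which may or may not be what you want.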

To my knowledge this is the first time multiple languages have been factorized together. This would be publishable with results on just English and Spanish, and here I got up to six, including Turkish (which is not Indo-European) and Farsi. It also sheds light on the nature of the Big Two, one of the most popular, and most misunderstood, constructs in psychometrics.

Shortcomings

I did this research in about the dumbest way possible. I found 100 personality words in an ESL guide, and then translated those to other languages using Google Translate. If there were duplicates, I removed them. This isn’t as bad as it seems. The first two factors are virtually unchanged in English whether you use 100 or 500 words. But, if this were a real paper, you would obviously want to develop a set of words in each vocabulary independently. There are several other shortcomings:

Not enough languages! If I published this, I would like to add a dozen more languages that are not typically studied in personality science. This is, in fact, why I never got around to publishing it. That’s a lot of work and would require native speakers of several Asian languages.

Multi-lingual models warped by training data. Language models are trained to predict words from their context. If you train with multiple languages, the model will try to transfer some of the knowledge. However, for the smaller languages this could look more like their meanings being strong-armed into analogies within the better-sourced languages (English, Chinese, Russian, etc.).

The queries are a researcher degree of freedom. The method I use to embed words is “My personality can be described as <mask> and [word]” where [word] is one of the personality words. Because of the way the sentence is written, the model loads pure personality information on the mask token and then embeds it. In my dissertation, I found this worked best. Of course, there are infinite variations to this, and you have to select one. Theoretically a researcher could have a particular result in mind, and then find a query that supports that. IMO it’s not too much of a risk, given how similar this result is to what survey methods produce. We have a pretty strong prior about what personality structure we find with factor analysis. This method recapitulating it is evidence the method works.

Outdated language model. I did this work over 2 years ago, long before GPT-4 came out. Simpler times.
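For the query template mentioned in the shortcomings above, here is a rough sketch of how the embedding step could look with the Hugging Face transformers library. The checkpoint name (`xlm-roberta-base`), the choice of the final hidden layer, and both function names are my assumptions for illustration. The post does not pin those details down.

```python
TEMPLATE = "My personality can be described as {mask} and {word}"

def build_prompt(word, mask_token="<mask>"):
    """Fill the query template with one personality word."""
    return TEMPLATE.format(mask=mask_token, word=word)

def embed_words(words, model_name="xlm-roberta-base"):
    """Return one vector per word: the hidden state at the mask position.

    Assumptions: checkpoint name and use of the last hidden layer.
    Heavy imports are local so the prompt helper works without them.
    """
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()

    vectors = []
    with torch.no_grad():
        for word in words:
            prompt = build_prompt(word, tokenizer.mask_token)
            encoded = tokenizer(prompt, return_tensors="pt")
            hidden = model(**encoded).last_hidden_state[0]  # (seq_len, hidden_dim)
            # Locate the mask token and keep only its vector.
            is_mask = encoded["input_ids"][0] == tokenizer.mask_token_id
            mask_position = int(is_mask.nonzero()[0, 0])
            vectors.append(hidden[mask_position].tolist())
    return vectors
```

The template here is the English one. Each other language would need its own translated template, and the wording of each is exactly the researcher degree of freedom described above.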

Conclusion

If I were still in academia, this would be my research agenda. Add as many languages as possible, and try to understand all the ways the method can be biased. In the end, it may produce a universal model of personality superior to the Big Five. It would help us better understand who we are, and maybe even where we came from. For it is language that defines our species now, and it was language that forged our psyche deep in the past. We are habitually social because thousands of years ago to fail to manage your reputation was to die. Personality models are maps of language; they are vectors in the evolution of our mind.

The big two look like Galen's 4 "humors": sanguine, phlegmatic, melancholy, choleric

Totally unrelated to your post, but:

One thing that bothers me about a lot of personality psychology is that it is just stirring around in some variance of abstract words, without getting at any dynamics involved.

However, one thing that is interesting about language models is that they can sort-of kind-of bypass that by asking them to write stories. For instance if I ask GPT on OpenAI's playground to...

> Write a detailed, concrete vignette of a person acting in an abusive way.

Then it writes the following story:

---

Jane was exhausted from a long day at work and the last thing she wanted to do was make dinner. When she walked in the door, her husband, John, was sitting on the couch with a cold beer in hand.

John looked up at her and said, "What took you so long? You know I'm hungry. Shouldn't you have been home earlier to make dinner for me?"

Jane was taken aback by his tone and the fact that he hadn't offered to help her with dinner. She tried to explain that she had been working late, but he wouldn't listen. Instead, he started to raise his voice and tell her that she was lazy and irresponsible.

He then stood up and started to walk towards her, getting closer and closer with each step. Jane was terrified and tried to back away, but he kept coming closer, until he was right in front of her. She could feel his breath on her face and he was shouting at her that she was worthless and he couldn't believe he had married her.

At this point, Jane was shaking and crying, and she was too scared to move or speak. John eventually backed away and left the room, leaving Jane in a state of shock and fear.

---

This sort of thing seems like it has the potential to reveal information about personality-descriptive words that is not available when just treating them abstractly.