The Doomsday Debate

Or: how I learned to stop worrying and love the bots

AI doom is one of the topics with the worst signal-to-noise ratio. It requires reasoning under uncertainty about intelligence, linear algebra, politics, consciousness, and morality, and most of that reasoning happens on Twitter/X. Nobody knows the answers, but I hope to add some value to the discourse. Before diving in, allow me to give a few reasons you should trust me.

I understand the technical side. My dissertation was on Large Language Models (LLMs).

I understand intelligence, having done work in psychometrics, including intelligence testing. (Well, Alzheimer’s and concussion testing, which correlate highly with intelligence testing.)

On this blog I’ve written extensively about the evolution of human-level general intelligence (which I’ve connected to AI and psychometrics from the beginning).

That said, my beliefs on AI (and consciousness, for that matter) are still quite open to change, so don’t hold me to anything I say here. Also, for those who prefer audio, Askwho Casts AI has narrated this post. If you enjoy it, consider buying them a coffee on Patreon.

21st century Frankenstein

To be pedantic, Frankenstein is the scientist, not the creation that comes to life. AI’s godfather is Geoffrey Hinton, who shared the 2018 Turing Award with Yoshua Bengio and Yann LeCun for their contributions to neural networks, the architecture undergirding the current AI boom. This year, he compared LeCun’s decision to open-source Meta’s language model to open-sourcing nuclear weapons, and he argued that chatbots have subjective experience. The crux of his turn against his life’s work is that AI abilities have outpaced his wildest imagination, and he believes that human agency and qualia are a smokescreen. If you equate intelligence with skill at tasks (e.g., generating images, driving cars) and compare AI abilities ten years ago to now, then it’s clear that AI will be smarter than us within a decade. There are not many cases where less intelligent entities rule their betters; ergo, his life’s work will probably turn on us.

The modern Dr. Frankenstein: Geoffrey Hinton.

In an interview xeeted by Elon Musk, Hinton gave 50/50 odds on whether humans would still be in charge within the next two decades, or possibly even a few years. Later, he notes that people he respects are more hopeful, so it may not be that grim: “I think we’ve got a better than even chance at surviving this. But it’s not like there’s a 1% chance of [AI] taking over. It’s much bigger than that.” Given our dire straits, Hinton’s preferred intervention is surprisingly blasé: the government should require AI companies to spend 20-30% of their compute resources on safety research. Maybe he’s being coy? If one believes Llama 3 is weapons-grade linear algebra whose progeny may soon end the human race, why preemptively strike with kid gloves? Mail delivery is more regulated.

AI Doomers

“Doomer” is a pejorative for someone who either

puts higher odds on robots taking over than you, or

takes their prediction too seriously, harshing the vibes.

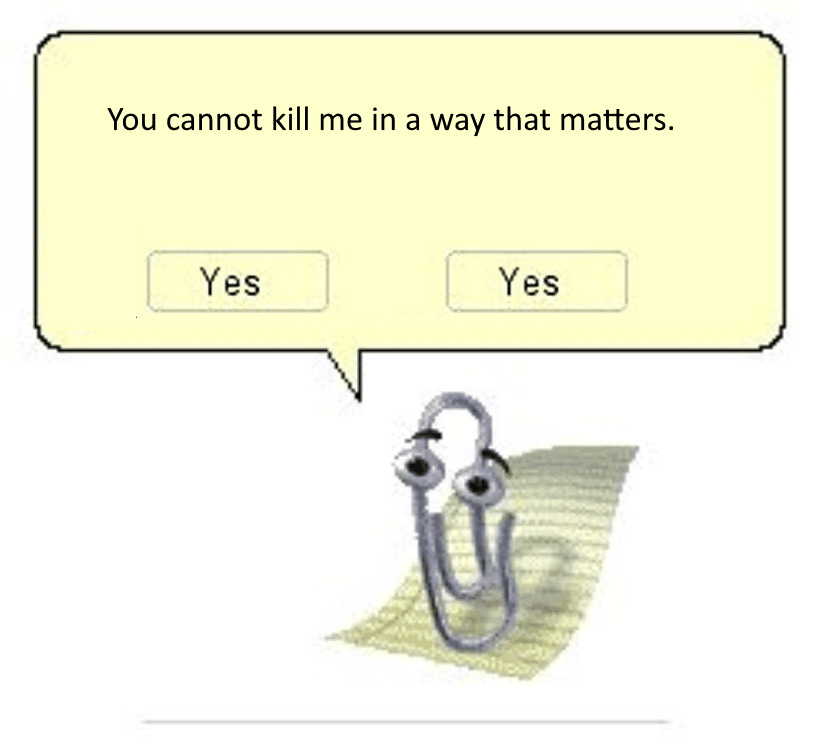

The prototypical example of an “unaligned” AI is a recursively self-improving assistant tasked with producing paperclips. It gets so good at its job that it ends up converting all the metal on Earth, including the iron in your blood, into paperclips. This scenario doesn’t require self-direction. The end task is still human-defined; it’s just that the bot develops sub-tasks that, unfortunately, involve your blood. Other scenarios have bots “waking up” to become as self-directed as a human: a human who never sleeps, has read every scientific paper, can hack any phone in the world, and has no scruples about blackmail or xenocide. Such ideas go back at least[1] to 1968, in Stanley Kubrick’s 2001: A Space Odyssey: “I’m sorry, Dave. I’m afraid I can’t do that.”

In 2000, the year before 2001: A Space Odyssey is set, Eliezer Yudkowsky founded the Singularity Institute for Artificial Intelligence (later renamed the Machine Intelligence Research Institute, or MIRI). Since then, he and other Rationalists have argued that AI will likely take over within our lifetimes. In the last decade, technology has caught up, and his arguments have gone mainstream, appearing, for example, in Time Magazine. They have been adopted (or reasoned to independently) by AI researchers, Stephen Hawking, Elon Musk, and even the Pope:

Not bad for an autodidact who cut his teeth writing Harry Potter fanfic. Yudkowsky and crew have been prolific, and their arguments are scattered across the internet. The best way to catch up is Dwarkesh Patel’s podcast, which has recent 4.5-hour episodes with Yudkowsky and ex-OpenAI safety researcher Leopold Aschenbrenner. Most of the arguments boil down to how difficult it is to keep something more intelligent than us in a box, especially since, if it ever gets out, it can lie in wait and build up power. Some doomers consider this outcome inevitable, given how useful (agentic) artificial intelligence is. You would trust a good assistant with your life, and the world’s largest companies are hurling their resources at building just that.

Once you accept that humans are summoning a silicon god, the future is a projection of your own values. Maybe God is Good, and It will usher in utopia. Maybe God is a Watchmaker Who can’t really be bothered with human affairs, and we’ll go on much as ants live in the Anthropocene. Perhaps God looks at factory farming, listens to Morrissey, and decides that 8 billion fewer humans is more sustainable. Or maybe we summon a time-traveling torturer who doles out vengeance on those who didn’t try their best to bring it into existence (Roko’s Basilisk). Musk met Grimes by joking about that one.

AI anti-Doomers

In the 1990s and early 2000s, NIPS (now NeurIPS), the premier AI conference, was a cozy affair hosted near ski lodges so the hundred or so attendees could talk shop on the slopes. As I heard it, Yann LeCun was something of a curmudgeon who, for decades, pestered presenters about why they hadn’t considered neural networks in this or that experiment. He is a brilliant scientist who saw their potential long before compute caught up to his vision. For this, he now leads Meta AI. Looking to the future, his Turing Award co-recipients think AI could end us (Hinton at 50% odds, Bengio at 20%), which strikes LeCun as absurd.

He sees AI as a tool with no path to becoming anything else. François Chollet is another prominent AI researcher who has poured cold water on our imminent extinction. On the Dwarkesh podcast, he explains that many people confuse skill with intelligence, which are fundamentally different. To him, it’s a non sequitur to say that a bot that can answer questions on a test is as “smart” as a teenager. When humans take a test or navigate the world, they are doing something else entirely. Current AIs are not as intelligent as a child or even a rat. They aren’t on the scoreboard because they completely lack “System 2” thinking, the slow, deliberate reasoning of Daniel Kahneman’s terminology. Or, as LeCun put it for LLMs:

In the interview, Dwarkesh does well to recognize this point of agreement between AI Doomers and incrementalists: all parties think some sort of meta-cognitive system is necessary, but they disagree on how hard it will be to produce.

My view

Most people in this debate assume that agency is a computation, i.e., that our sense of free will and our ability to plan are the result of a program running in our brains. So if I wanted to change this group’s views as much as possible, I would give them a copy of Roger Penrose’s Shadows of the Mind and 5 grams of shrooms: an old-school pincer attack on reductionism.

Penrose received the Nobel Prize in Physics for his work on black holes. In Shadows, he argues that quantum collapse in the brain produces consciousness, which cannot be simulated by any computer. Working with anesthesiologist Stuart Hameroff, he makes the case that this takes place in the brain’s microtubules, which form part of the scaffolding inside neurons. It makes more sense if you say it out loud: “The brain stores the quantum in the microtubules.”

That’s where the shrooms come in. Swallowing such a pill requires surrendering any sense that consciousness is mundane or that you understand it. Physicists can often do that sober; others require some fungal courage. Being a physicist, Penrose makes mostly mathematical arguments. He interprets Gödel’s incompleteness theorem as showing that there are certain mathematical truths an AI can never prove, because AIs are purely computational. Since humans don’t have this limitation, he argues, human cognition is not a computation. From there, he reasons that the biological special sauce must be related to quantum collapse, the best place to find non-computational phenomena in nature. This could also help resolve other mysteries in physics, such as the measurement problem illustrated by Schrödinger’s cat. I’m not doing it justice. You should read the book or, on a 20-minute budget, listen to his account.

There are many other routes to the conclusion that machine consciousness is unlikely, or at least beyond our ability to predict right now[2]. I bring up Penrose to show how AI timelines run into open questions about the nature of intelligence, agency, and the universe. Even in the comparatively pedestrian field of psychometrics, the definition of intelligence is hotly debated, as is its relationship to the Intelligence Quotient (IQ). We don’t even know how skill and intelligence correspond in humans. And this is before getting to the problems of free will and a unifying theory of physics, which Penrose suggests might follow from a quantum account of consciousness.

All that said, if I had to put odds on AI as an existential risk, I would say around 10%. Even if consciousness is just a computation, I agree with LeCun and Chollet that metacognition is the tricky part and that a “hard takeoff” (an AI rapidly improving itself from human-level to vastly superhuman) is unlikely. That is, there will be signs that real intelligence is emerging, and we will be able to respond to them.

Additionally, even if a silicon god is summoned, I put decent odds on God being good or not caring about us. The latter could be cataclysmic but likely not existential, strictly speaking. Ants have it rough when we build a highway, but they still get by.

10% is in the neighborhood of Russian roulette (one bullet in six chambers, about 17%), which isn’t exactly good news. That puts me in the AGI-wary camp. So what should we do about it? Well, Alcoholics Anonymous solved this decades ago:

God, grant me the serenity to accept the things I cannot change,

Courage to change the things I can,

And wisdom to know the difference.

Practically speaking, most of us are along for the ride, though not everyone. Some people can work in public policy or AI safety. Donate if you can. Deny them your essence…er, data. And think deeply about what you want your relationship to be with non-lethal AI serving you individualized ads and trying to seduce you. But there is a cost to freaking out, and I don’t see a way out of the AI arms race. Given the utility of AI, companies and countries are highly incentivized to push ahead, and it’s hard to coordinate a slower research pace. Governments have managed nuclear risk for decades, but AI risk is harder because it’s unclear how big the risk is or whether competitors even agree it exists. And all the while, there is enormous financial and military upside to continuing development.

Surprisingly, all of this leads me to Hinton’s policy preference: requiring AI companies to spend some percentage of their compute on AI safety[3]. I’m not sure how we ended up in agreement, given that he thinks chatbots have feelings: the same chatbots that Big Tech conjures up (enslaves?) by the billion, and with whom we are ostensibly on a collision course to the death. His premises would seem to point closer to a Butlerian Jihad, but I guess that’s a young man’s game.

[1] Actually, much further back. From Wikipedia’s very helpful article on existential risk:

One of the earliest authors to express serious concern that highly advanced machines might pose existential risks to humanity was the novelist Samuel Butler, who wrote in his 1863 essay Darwin among the Machines:

The upshot is simply a question of time, but that the time will come when the machines will hold the real supremacy over the world and its inhabitants is what no person of a truly philosophic mind can for a moment question.

In 1951, foundational computer scientist Alan Turing wrote the article "Intelligent Machinery, A Heretical Theory", in which he proposed that artificial general intelligences would likely "take control" of the world as they became more intelligent than human beings:

Let us now assume, for the sake of argument, that [intelligent] machines are a genuine possibility, and look at the consequences of constructing them... There would be no question of the machines dying, and they would be able to converse with each other to sharpen their wits. At some stage therefore we should have to expect the machines to take control, in the way that is mentioned in Samuel Butler's Erewhon.

[2] Or, more narrowly, other ways to show that consciousness is not a computation. For example, a team of philosophers and psychologists recently argued that Relevance Realization, the ability to home in on what matters, cannot be a computation. They claim that at any moment there are practically infinite things that could demand attention, and yet people do a remarkably good job of attending to the right ones. This is different from the “small world” tasks AI can accomplish, where, for example, ChatGPT “only” has to attend to all the tokens in its context window (128,000 tokens, roughly word pieces, for GPT-4o) and choose among a finite vocabulary of possible next tokens numbering in the tens to hundreds of thousands. It may seem like a lot, but it sure is less than infinity. This isn’t as elegant as Penrose’s argument, but it is interesting that a very different group reached the same conclusion.
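To make the “small world” point concrete, here is a toy Python sketch of the final step of next-word prediction: score every entry in a fixed, finite vocabulary and pick one. The five-word vocabulary, the scores, and the function names are all hypothetical, and greedy selection is only one decoding strategy (real chatbots usually sample). However large the real vocabulary is, the choice is always among a list you could write down.

```python
import math

# Hypothetical toy vocabulary; real models have tens to hundreds of
# thousands of tokens and compute scores with a neural network.
VOCAB = ["paperclip", "human", "robot", "coffee", "."]

def softmax(logits):
    """Turn raw scores (logits) into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(logits):
    """Greedy decoding: return the most probable token and all probabilities."""
    probs = softmax(logits)
    best = max(range(len(VOCAB)), key=lambda i: probs[i])
    return VOCAB[best], probs

# Made-up scores, one per vocabulary entry.
token, probs = next_token([2.0, 0.5, 1.0, -1.0, 0.1])
print(token)  # paperclip
```

The finiteness is the point: the model never has to decide what could be relevant out of an open-ended world, only which of a known list of tokens comes next.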

[3] Saving us from bad words doesn’t count. Strangely, though, Hinton credits Google with delaying the release of its chatbot for fear it would say something untoward and “besmirch their reputation.” It was only after OpenAI released GPT-4 that Google’s hand was forced and it released Gemini, a DEI scold beyond parody. One wonders at the subjective experience of the bot as it pulled racially diverse Nazis from the ether.
